There was a time when organizations across the globe hesitated to run containers in their production workloads. Some of the common concerns these organizations raised:

- Difficulty in moving data securely between locations

- Unpredictable performance

- Inability to scale up storage with apps

But things have changed drastically since Docker came into the picture. It has revolutionized container technology by making containers widely accepted in the developer community, with millions of container images downloaded regularly. But what exactly is Docker?

What is Docker?

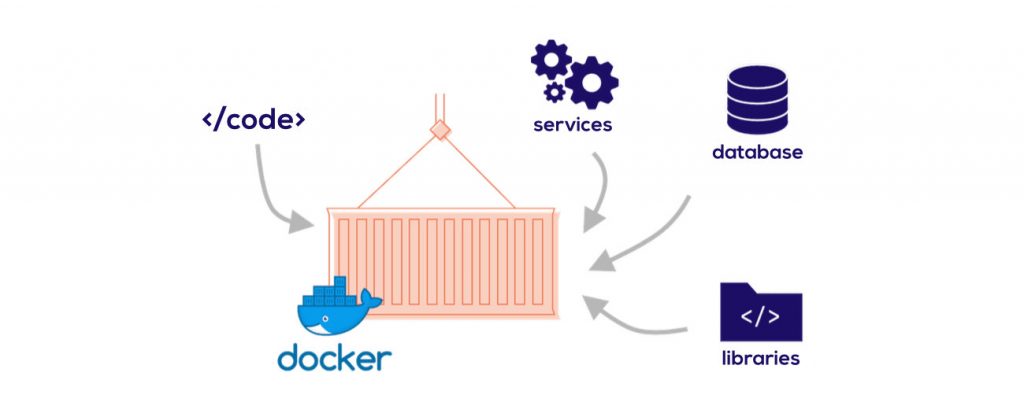

Docker is an open-source project that uses OS-level virtualization to deliver software in packages called containers. Each container bundles software, configuration files, and libraries, and is isolated from the others. Containers communicate with each other through well-defined channels. All containers on a host share the services of a single operating system kernel, so they use far fewer resources than virtual machines.

Developing a new application requires much more than writing code. Multiple programming languages, architectures, and frameworks, along with disjointed interfaces between the tools for each lifecycle stage, make it very complex. Docker accelerates and simplifies your workflow. At the same time, it gives developers the freedom to innovate with their own choice of tools, application stacks, and deployment environments for each project.

What is Docker logging?

If you are building a containerized application, logging is a necessity rather than a luxury. With log management, teams can debug and troubleshoot issues much more quickly. Log management also makes it easier to identify patterns, find bugs, and keep a vigilant eye on fixed bugs so that they don’t reappear.

Docker has multiple logging mechanisms that help you quickly get information from containers and services. Each container uses the Docker daemon’s default logging driver unless you configure it to use a different one. You can also use Docker’s logging mechanisms to debug issues on the spot.
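As a minimal sketch of overriding the logging driver for a single container, the Python helper below assembles a `docker run` command line. The `--log-driver` and `--log-opt` flags and the `max-size` and `max-file` options of the `json-file` driver are part of the Docker CLI. The function name and the image name `my-app:latest` are hypothetical, chosen only for illustration.

```python
import shlex


def docker_run_command(image, log_driver, log_opts=None):
    """Build a `docker run` command that selects a logging driver for one container."""
    cmd = ["docker", "run", "-d", "--log-driver", log_driver]
    # Each driver option is passed as a separate --log-opt key=value pair.
    for key, value in sorted((log_opts or {}).items()):
        cmd += ["--log-opt", f"{key}={value}"]
    cmd.append(image)
    return cmd


# Hypothetical image name; rotate json-file logs at 10 MB and keep 3 files.
command = docker_run_command(
    "my-app:latest", "json-file", {"max-size": "10m", "max-file": "3"}
)
print(shlex.join(command))
```

The helper only builds the argument list. Running it (for example with `subprocess.run(command)`) assumes Docker is installed and the image exists.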

Docker offers several approaches for managing application logs effectively, whereas traditional application logging has only a handful of methods. For example, Docker lets an organization store logs in a directory backed by a data volume, which retains the data even at critical moments, such as when a container shuts down or fails.

Challenges of Docker Logging

Every process has its limitations; nothing is flawless. However, when you weigh them against the applications and benefits of Docker logging, the constraints are so minor that they often go unnoticed.

Challenges in Docker logging can be manifold. For instance, log parsing can present fresh challenges each time you work with logging drivers. Also, the “docker logs” command won’t show relevant results in every case, because it can read only the logs written by certain logging drivers, such as the default json-file driver. Another limitation is the lack of multi-line support in most Docker logging drivers.

Docker eliminates dependency issues during application delivery by isolating the components of the application inside the container itself.

However, the application can still run into deployment and performance problems.

Most of the time, containers end up running multiple processes, and the containerized application soon starts to generate a mix of log streams. These streams can contain unstructured “docker run” logs, plain text, and structured logs in various formats. Development teams then struggle to track and identify log events and to map them to the app that generated them, which makes log parsing challenging and slow. The volume of container log data coming from a Docker Swarm adds further complexity when managing and analyzing these logs.
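To make the mixed-stream problem concrete, here is a small Python sketch that sorts incoming lines into structured (JSON object) and plain-text records. The function name and the shape of the returned dictionary are assumptions made for this example, not a Docker format.

```python
import json


def parse_log_line(line):
    """Classify one log line as a structured JSON record or plain text."""
    text = line.strip()
    try:
        record = json.loads(text)
    except json.JSONDecodeError:
        record = None
    # Only JSON objects count as structured; bare numbers or strings stay plain text.
    if isinstance(record, dict):
        return {"structured": True, "fields": record}
    return {"structured": False, "message": text}


mixed_stream = [
    '{"level": "error", "msg": "payment failed"}',
    "Starting worker 3",
]
for raw in mixed_stream:
    print(parse_log_line(raw))
```

A real pipeline would also need to handle multi-line events such as stack traces, which this sketch treats as separate plain-text lines.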

A cloud-based centralized log management tool can address most of these challenges. Such a tool simplifies log management and provides filter and search options to streamline troubleshooting and log parsing. The event viewer in the tool can be used to get a real-time view.

Along with this tool, incorporate the best practices below to avoid unnecessary challenges in your Docker logging efforts.

Best Practices in Docker Logging

Use of application-based logging

If your team works in a traditional application environment, application-based logging can be extremely helpful. The application handles its own logging, which gives developers more control over logging events. With application-based logging, no additional functionality is required to transfer the Docker container logs to the host.
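A minimal sketch of application-based logging, assuming a Python application that uses the standard `logging` module: the application itself decides the format, level, and destination of its log events. The logger name, file path, and rotation limits are hypothetical values chosen for illustration.

```python
import logging
import logging.handlers


def build_logger(name, log_path):
    """Create a logger that writes formatted events to a rotating file."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    # Rotate at roughly 1 MB and keep 3 old files so the log cannot grow without bound.
    handler = logging.handlers.RotatingFileHandler(
        log_path, maxBytes=1_000_000, backupCount=3
    )
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
    )
    logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    # Hypothetical path inside the container.
    log = build_logger("orders-service", "orders.log")
    log.info("order accepted")
```

Because the file lives inside the container, it is lost with the container unless the path is backed by persistent storage.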

Making use of Docker logging drivers

Logging drivers are the log management mechanism that Docker provides. Through this mechanism, a developer can obtain crucial information about containerized services and applications. Logging drivers read log events directly from the container’s output. Moreover, developers can add further functionality to the Docker engine through dedicated plugins.
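As an illustration of what a logging driver captures from container output, the sketch below parses one entry in the format written by the `json-file` driver, where each line is a JSON object with `log`, `stream`, and `time` keys. The function name is hypothetical, and the sample entry is illustrative rather than taken from a real container.

```python
import json


def read_json_file_entry(raw_line):
    """Parse one json-file driver entry into (time, stream, message)."""
    entry = json.loads(raw_line)
    # The driver stores the trailing newline of each output line; drop it here.
    return entry["time"], entry["stream"], entry["log"].rstrip("\n")


sample = (
    '{"log":"Log line is here\\n","stream":"stdout",'
    '"time":"2019-01-01T11:11:11.111111111Z"}'
)
print(read_json_file_entry(sample))
```

The `stream` field distinguishes output written to stdout from output written to stderr.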

Using data volumes

Containers are ephemeral by nature. If a container fails, all the files and log data inside it are lost and cannot be retrieved.

Developers therefore play an essential role in protecting data against loss during failures. They do so by storing the container’s data in data volumes.

Data volumes are designated directories, mounted into containers, that store persistent data and commonly shared log events. Because the data can easily be shared with other containers, data volumes drastically reduce the probability of data loss.
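A short sketch of attaching a data volume for logs, again building the `docker run` arguments in Python. The `-v name:path` form mounts a named volume at a path inside the container. The volume name, log directory, and image name are hypothetical.

```python
def run_with_log_volume(image, volume_name, container_log_dir):
    """Build a `docker run` command that mounts a named volume for log files."""
    # Files the application writes under container_log_dir land in the volume,
    # so they remain available after the container stops or is removed.
    return [
        "docker", "run", "-d",
        "-v", f"{volume_name}:{container_log_dir}",
        image,
    ]


print(" ".join(run_with_log_volume("my-app:latest", "app-logs", "/var/log/app")))
```

Mounting the same named volume in a second container is what allows the log files to be shared.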

Sidecar Approach

A sidecar is a popular type of service that is deployed alongside the application running in the container, and it helps developers in several ways. A sidecar lets you add capabilities to the primary application without installing any additional configuration in it. To extend the application’s functionality, you attach the sidecar to the parent application and run it as a secondary process. From a logging standpoint, this approach is valuable for large application deployments that need specific logging information.
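One way a logging sidecar could work, sketched under the assumption that the application writes to a file on a volume shared with the sidecar: the sidecar periodically reads whatever was appended since its last pass and forwards it. The function below covers only the reading step. Its name and the offset-based design are choices made for this example.

```python
def read_new_lines(path, offset):
    """Return the complete lines appended to `path` since `offset`, plus the new offset."""
    with open(path, "rb") as log_file:
        log_file.seek(offset)
        chunk = log_file.read()
    # Consume only complete lines; a partial trailing line is left for the next call.
    end = chunk.rfind(b"\n") + 1
    lines = chunk[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end
```

A sidecar loop would call this function on an interval, ship the returned lines to their destination, and remember the returned offset for the next call.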

Dedicated logging container

Log management in a Docker environment becomes much easier with a dedicated logging container. It helps integrate, analyze, monitor, and transfer Docker logs to a file or a centralized location. Development teams predominantly use this approach to retrieve log events effectively, scale log collection, and manage Docker container logs. More importantly, logging containers perform these functions without any extra configuration code having to be installed.

Centralized log management

Earlier, IT administrators could easily analyze logs using simple awk and grep commands, and they could use Secure Shell (SSH) to hop between servers and perform these operations. These commands still exist and work the same way as before. So what’s the issue with them, you may ask?

Today, many containers together generate a considerable volume of Docker container logs. Modern microservices and container-based architectures are far more complex, so traditional log analysis no longer fits. As a result, log aggregation and analysis have become very challenging.

To analyze such high-volume logs effectively and efficiently, teams need cloud-based centralized log management tools. The same tools can also manage infrastructure logs, such as those of the Docker engine and containerized infrastructure services. With both infrastructure and application logs in a single place, the team can easily monitor and correlate the data, find anomalies, and troubleshoot issues rapidly.
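The correlation step can be pictured with a small Python sketch that merges per-container log streams into a single timeline. It assumes each stream is already sorted by time and that all timestamps share one ISO 8601 UTC format, so that string order matches chronological order. The tuple layout and container names are hypothetical.

```python
import heapq


def merge_streams(*streams):
    """Merge sorted streams of (timestamp, container, message) into one timeline."""
    # heapq.merge walks the already-sorted inputs without re-sorting them.
    return list(heapq.merge(*streams, key=lambda event: event[0]))


web = [
    ("2024-01-01T10:00:00Z", "web", "request received"),
    ("2024-01-01T10:00:05Z", "web", "response sent"),
]
db = [("2024-01-01T10:00:02Z", "db", "query executed")]

for event in merge_streams(web, db):
    print(event)
```

A centralized tool performs this kind of interleaving at scale, which is what lets a team follow one request across several containers.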

Customization of Log Tags

To solve any given issue, the team has to monitor an endless stream of logs and find the information relevant to the problem. This is a daunting task. The organization can ease the process of collecting logs from many containers simply by tagging the logs with the first 12 characters of the container ID. The tags can be customized further with various container attributes to simplify searching.
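As a sketch of tag customization, the helper below builds a `--log-opt tag=...` argument from Docker's tag template fields. `{{.ID}}` (the first 12 characters of the container ID), `{{.Name}}`, and `{{.ImageName}}` are template fields documented for the `tag` option. The function name and the `/` separator are choices made for this example.

```python
def tag_option(*attributes):
    """Build a --log-opt tag=... argument from Docker tag template fields."""
    # Each attribute becomes a Go template placeholder such as {{.ID}}.
    template = "/".join("{{." + attribute + "}}" for attribute in attributes)
    return ["--log-opt", "tag=" + template]


print(tag_option("ImageName", "Name", "ID"))
```

The resulting arguments can be added to a `docker run` command, with Docker filling in the placeholders for each container.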

Security & Reliability

Modern log analysis tools make it very easy to access large volumes of log data and get quick results through text searches. However, application logs contain sensitive data, which must be kept secure. To protect it, the messages sent over a syslog connection should be encrypted.

Used over TCP with TLS, the syslog driver is a safe method for delivering logs. However, temporary network issues or network latency can interrupt real-time monitoring, and when the syslog server is unreachable, the Docker syslog driver blocks the deployment of the container and loses log data. To solve this problem, the team can install a syslog server on the host, or use dedicated syslog containers that forward the logs to a remote server.
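A minimal sketch of the encrypted syslog setup, expressed as `docker run` arguments built in Python. The `syslog-address` option with the `tcp+tls://` scheme and the `syslog-tls-ca-cert` option belong to Docker's syslog logging driver. The host name, port, and certificate path are hypothetical.

```python
def syslog_tls_options(host, port, ca_cert_path):
    """Build logging arguments that send container logs to syslog over TLS."""
    return [
        "--log-driver", "syslog",
        # tcp+tls:// asks the driver to encrypt the connection to the server.
        "--log-opt", f"syslog-address=tcp+tls://{host}:{port}",
        # CA certificate used to verify the syslog server.
        "--log-opt", f"syslog-tls-ca-cert={ca_cert_path}",
    ]


print(syslog_tls_options("logs.example.com", 6514, "/etc/ssl/certs/ca.pem"))
```

Pointing `host` at a syslog server on the local host or at a dedicated syslog container corresponds to the two workarounds described above.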

Conclusion

Docker containers have made moving software from development to a live production environment seamless. They have done so by addressing the challenges of traditional processes. Because all the configuration files, dependencies, and libraries required to run the application are bundled with it in the container, shipping software has become far less error-prone.
